What are AI Agents? Why do they matter?

Understanding the evolution, architecture and future of AI Agents

An agent is a program that autonomously completes tasks or makes decisions based on data. It talks to an AI model to perform a goal-based operation using tools and resources.

The distinction between traditional AI models and agents is subtle but profound. When we interact with an AI model such as Gemini, o1, Sonnet, or a similar large language model, we're essentially engaged in a series of one-shot interactions: we provide input, the model processes it, and it returns output. While these interactions can be sophisticated, they're fundamentally reactive and stateless. Each response exists in isolation, without true continuity or the ability to take independent action.

AI agents, by contrast, are autonomous systems designed to perceive their environment, make decisions, and take actions to achieve specific goals - all while maintaining context and adapting their approach based on results.

This might sound like a minor distinction, but it represents a fundamental shift in how AI systems operate and what they can achieve.

Consider how many of us use AI chat interfaces today. You might ask ChatGPT to write an article from start to finish and get a one-shot response, which you will probably still need to iterate on yourself. An agentic version is more nuanced - an agent might write an outline, decide whether research is needed, write a draft, evaluate whether it needs work, and revise it. Let’s look at some examples of agents in action.

When you ask an AI model to help you analyze some data, it can suggest approaches and even write code, but it can't actually execute that code or interact with your data directly. An AI agent, on the other hand, can actively work with your data: loading files, running analyses, generating visualizations, and even suggesting and implementing improvements based on the results.

This becomes even more powerful in agent-to-agent workflows. Consider a data analysis project where multiple agents collaborate: A data preparation agent might clean and normalize your raw data, passing it to an analysis agent that applies statistical methods and identifies patterns. This agent might then collaborate with a visualization agent to create compelling representations of the findings, while a documentation agent records the methodology and results. Finally, a review agent might validate the entire workflow and suggest improvements or additional analyses.

Each agent in this workflow maintains its own context and expertise while coordinating with others through structured protocols.

They can handle edge cases dynamically - for instance, if the analysis agent discovers data quality issues, it can request specific cleanups from the preparation agent, or if the visualization agent identifies an interesting pattern, it can suggest additional analyses to explore it further. This goes beyond the difference between a consultant who gives advice and a colleague who helps complete the work - it's like having an entire team of specialists working together seamlessly on your behalf.
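The feedback loop described above, where the analysis agent sends a cleanup request back to the preparation agent, can be sketched in a few lines. This is a minimal illustration, assuming plain Python functions stand in for LLM-backed agents; the class names, the `Message` format, and the "missing value" quality check are hypothetical, not any framework's API.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """A structured message passed between agents (hypothetical format)."""
    sender: str
    kind: str      # e.g. "data", "cleanup_request", "result"
    payload: dict


class PreparationAgent:
    name = "prep"

    def clean(self, rows, drop_missing=False):
        # Convert sales strings to floats; optionally drop rows with missing values.
        cleaned = []
        for row in rows:
            value = row.get("sales")
            if value is None or value == "":
                if drop_missing:
                    continue
                cleaned.append({**row, "sales": None})
            else:
                cleaned.append({**row, "sales": float(value)})
        return Message(self.name, "data", {"rows": cleaned})

    def handle(self, msg, rows):
        # Respond to a cleanup request sent by another agent.
        if msg.kind == "cleanup_request":
            return self.clean(rows, drop_missing=msg.payload.get("drop_missing", False))
        raise ValueError(f"unsupported request: {msg.kind}")


class AnalysisAgent:
    name = "analysis"

    def analyze(self, msg):
        rows = msg.payload["rows"]
        # On a data quality issue, ask the preparation agent for a specific cleanup.
        if any(r["sales"] is None for r in rows):
            return Message(self.name, "cleanup_request", {"drop_missing": True})
        total = sum(r["sales"] for r in rows)
        return Message(self.name, "result", {"total": total, "count": len(rows)})


def run_pipeline(raw_rows, max_rounds=3):
    """Pass data between the two agents until the analysis agent produces a result."""
    prep, analysis = PreparationAgent(), AnalysisAgent()
    msg = prep.clean(raw_rows)
    for _ in range(max_rounds):
        reply = analysis.analyze(msg)
        if reply.kind == "result":
            return reply.payload
        msg = prep.handle(reply, raw_rows)
    raise RuntimeError("agents did not converge")
```

With one row missing its sales figure, the first analysis pass triggers a cleanup request, and the second pass succeeds on the two remaining rows. The `max_rounds` cap is a design choice that keeps two agents from bouncing requests back and forth indefinitely.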

Back to one-shot prompts vs. agents: when we talk about AI for code generation, most of us are used to the ‘prompt and response’ approach. You give the AI a prompt like “write me code that does X” and it responds with some code.

An agent takes a more nuanced approach. It can outline the logic, check the code, run tests, detect bugs, and then rethink and fix them if something doesn’t work. This iterative process mimics how engineers tackle problems, and it delivers better results. The AI becomes a collaborator, not just a pure generator of outputs.
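A minimal sketch of that check, test, and revise loop, assuming a stand-in `propose_fix` function in place of a real model call (a hypothetical name; in practice it would be an LLM request that receives the source and the failing test output). The draft below contains a deliberate off-by-one bug so the loop has something to fix.

```python
from typing import Callable


def run_tests(func: Callable[[int], int], cases: list[tuple[int, int]]) -> list[str]:
    """Run (input, expected) cases and return a list of failure descriptions."""
    failures = []
    for arg, expected in cases:
        try:
            got = func(arg)
        except Exception as exc:  # a crash counts as a failure
            failures.append(f"f({arg}) raised {exc!r}")
            continue
        if got != expected:
            failures.append(f"f({arg}) = {got}, expected {expected}")
    return failures


def propose_fix(source: str, failures: list[str]) -> str:
    # Hypothetical stand-in for a model call: a real agent would send the source
    # and the failure report to an LLM and receive revised code back.
    return source.replace("range(1, n)", "range(1, n + 1)")


def agent_loop(source: str, cases, max_attempts: int = 3):
    """Generate -> test -> revise until the tests pass or attempts run out."""
    failures: list[str] = []
    for attempt in range(1, max_attempts + 1):
        namespace: dict = {}
        exec(source, namespace)  # a real system would execute this in a sandbox
        failures = run_tests(namespace["factorial"], cases)
        if not failures:
            return source, attempt
        source = propose_fix(source, failures)
    raise RuntimeError(f"still failing after {max_attempts} attempts: {failures}")


# First draft has an off-by-one bug that the tests will catch.
draft = """
def factorial(n):
    result = 1
    for i in range(1, n):
        result *= i
    return result
"""
fixed_source, attempts = agent_loop(draft, [(0, 1), (1, 1), (5, 120)])
```

Here the first attempt fails on `factorial(5)`, the revised draft passes on the second attempt, and the attempt cap bounds how long the agent keeps iterating.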

This autonomy and ability to take action is what sets agents apart from even the most sophisticated language models. This shift enables automation of the "long tail" of tasks that were never worth building traditional automation for - everything from organizing files across multiple systems to monitoring data feeds for specific patterns and coordinating complex workflows across multiple applications.

Why am I personally excited about agents?

Their value comes from elastic capacity to tackle work that organizations previously couldn't afford to address, not from replacing existing systems or people.

Agents shift the paradigm from software as a tool to software as a worker. This may be an entirely new relationship between enterprises and their tech stack.

Agents may decouple software value from end-user volume. You're no longer constrained by how much work your human team can accomplish.

Agent use cases such as “Deep Research” and agent-driven coding already save me a lot of time, freeing me to focus on what AI can’t do.

The emergence of standardized protocols for AI agent interoperability

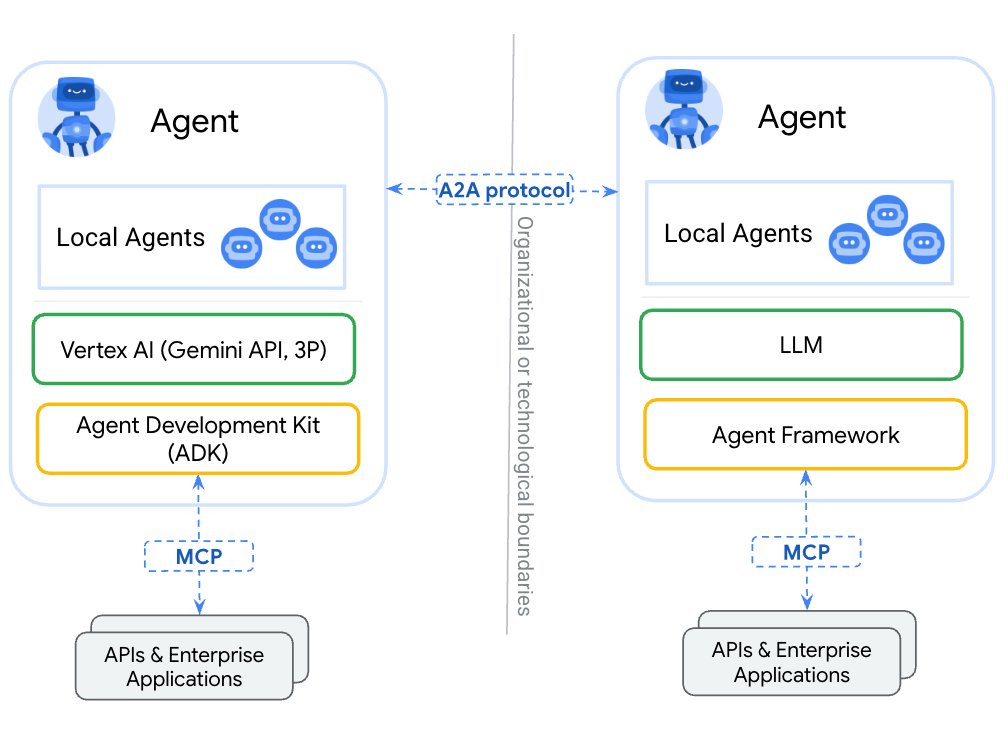

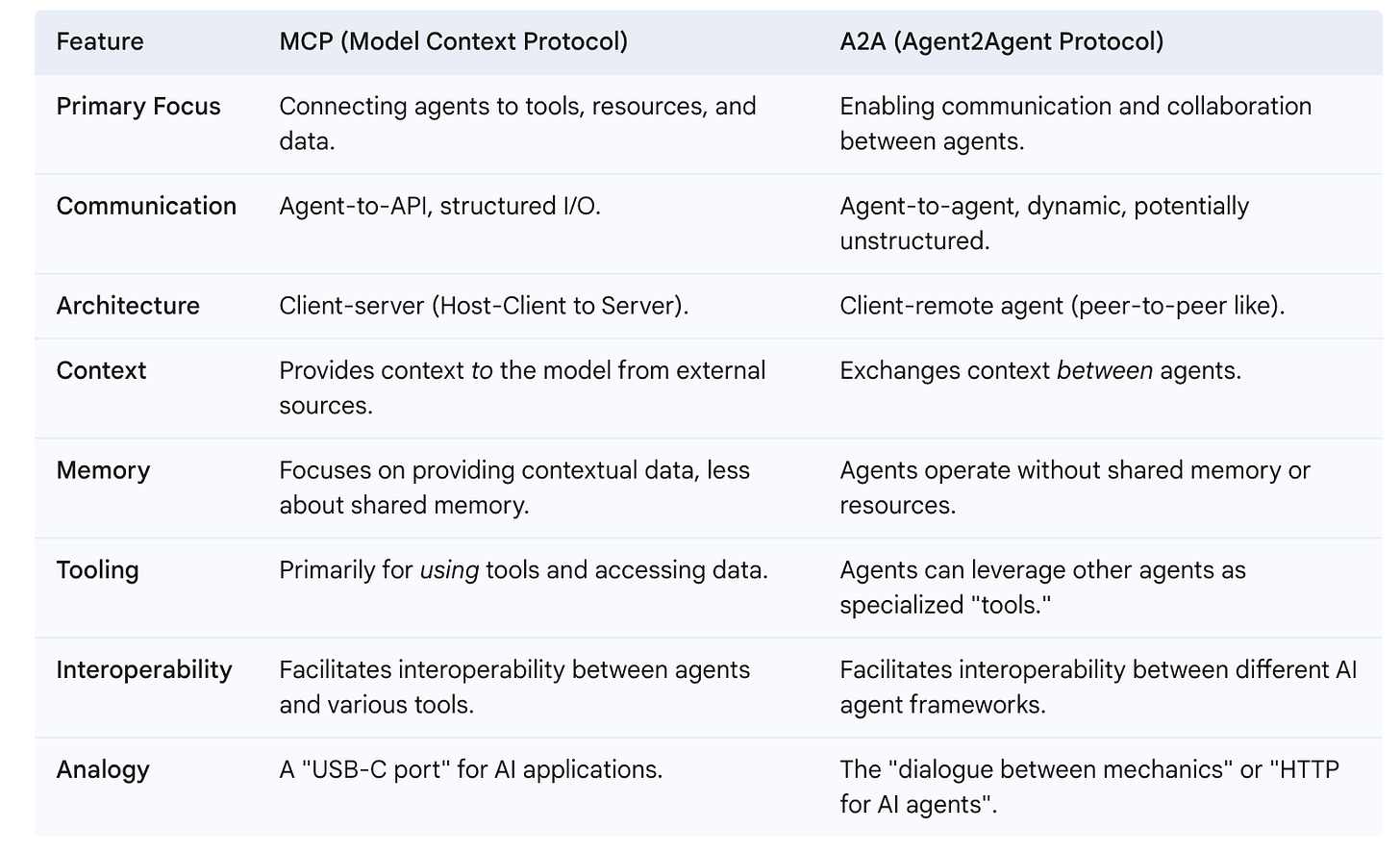

The ability of AI agents to operate autonomously and collaboratively necessitates the development and adoption of standardized communication protocols to ensure seamless interoperability and the creation of sophisticated multi-agent systems. The absence of such standards could lead to fragmented ecosystems, hindering the potential for complex task automation and cross-platform collaboration. There are two key developments here worth keeping in mind.

Model Context Protocol (MCP): Introduced by Anthropic, MCP is an open standard designed to connect AI models to external tools and data sources (often described as “USB-C for AI integrations”). It enables AI assistants to directly access and interact with various datasets, enhancing their information retrieval and task execution capabilities. For instance, MCP allows an AI assistant to connect directly to platforms like GitHub to create repositories and manage pull requests. Learn more about MCP, with lots of practical examples, in MCP: What it is and why it matters.
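Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds a `tools/call` request of the kind a client sends to an MCP server, plus a toy server-side handler that answers it with text content. The tool name `create_repository` and its arguments are illustrative assumptions; a real integration would use an MCP SDK and the tool schemas the server advertises via `tools/list`.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids


def tools_call_request(name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# Toy server-side tool (hypothetical implementation, no real GitHub call).
def create_repository(name: str, private: bool = False) -> str:
    visibility = "private" if private else "public"
    return f"created {visibility} repository {name}"


TOOLS = {"create_repository": create_repository}


def handle(raw: str) -> dict:
    """Dispatch a tools/call request and wrap the result as text content."""
    req = json.loads(raw)
    params = req["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        # -32602 is the JSON-RPC "Invalid params" error code.
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32602, "message": f"unknown tool {params['name']}"}}
    text = tool(**params["arguments"])
    return {"jsonrpc": "2.0", "id": req["id"],
            "result": {"content": [{"type": "text", "text": text}], "isError": False}}


response = handle(tools_call_request("create_repository", {"name": "demo", "private": True}))
```

The agent never needs to know how the tool is implemented; it only needs the tool's name and argument schema, which is what lets one protocol front many different systems.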

Agent2Agent Protocol (A2A): Recently announced by Google, A2A is an open standard aimed at facilitating seamless communication and collaboration between AI agents from different vendors and frameworks. It allows agents to securely exchange information and coordinate actions across various enterprise platforms, promoting interoperability and enhanced automation.

Here’s an example of A2A working via Google’s Agentspace:

To keep it simple: MCP connects agents to tools (think agent-to-API) while A2A enables agents to talk to other agents.
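To make that distinction concrete, here is a deliberately simplified sketch, assuming hypothetical `Tool` and `PeerAgent` classes; it does not reproduce either protocol's wire format or schemas. A tool performs one well-defined operation and returns; a peer agent receives a goal and decides for itself how to pursue it, possibly using its own tools.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    """MCP-style capability: a single, well-defined operation (agent-to-API)."""
    name: str
    run: Callable[[], object]


@dataclass
class PeerAgent:
    """A2A-style collaborator: accepts a goal and plans its own steps (agent-to-agent)."""
    name: str
    tools: dict[str, Tool]

    def delegate(self, goal: str) -> list[str]:
        # Hypothetical "planning": use any of its own tools mentioned in the goal.
        log = []
        for tool_name, tool in self.tools.items():
            if tool_name in goal:
                log.append(f"{self.name} used {tool_name}: {tool.run()}")
        return log or [f"{self.name} could not handle: {goal}"]


# Agent-to-API: the caller chooses the exact operation.
weather = Tool("weather", lambda: "18C and cloudy")
direct = weather.run()

# Agent-to-agent: the caller hands over a goal; the peer decides which tools to use.
travel_agent = PeerAgent("travel-agent", {"weather": weather, "flights": Tool("flights", lambda: "3 options")})
delegated = travel_agent.delegate("check weather and flights for Lisbon")
```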

Beyond simple automation: understanding agent capabilities

The capabilities of AI agents extend far beyond simple automation scripts or chatbots. They can:

Maintain persistent context and memory across multiple interactions, allowing them to learn from experience and adapt their approach over time.

Use tools and interact with external systems, whether that's through APIs, web browsers, or direct system interactions.

Break down complex goals into manageable sub-tasks and execute them in a logical sequence.

Monitor their own progress and adjust strategies based on results.

Collaborate with other agents, each specializing in different aspects of a larger task.

This combination of capabilities enables agents to handle complex, multi-step tasks that would be difficult or impossible for traditional AI systems, and this capacity for sustained, goal-directed action represents a fundamental advance in how AI systems can assist with such work.

Agent Recipes is a site to learn about agent/workflow recipes with code examples that you can easily copy & paste into your own AI apps.

Agent patterns and architectures: the building blocks of autonomous systems

The emergence of AI agents has given rise to several distinct architectural patterns, each solving different aspects of the autonomous decision-making and action-taking challenge. Understanding these patterns is crucial for grasping both the current capabilities and limitations of agent systems, as well as their potential future evolution.

Tool use and integration

The most fundamental pattern in agent architecture is tool use - the ability to interact with external systems and APIs to accomplish tasks. This is about understanding when and how to use different tools effectively. Modern agents can interact with everything from database systems to development environments, but what's particularly interesting is how they choose which tools to use and when.

For instance, when an agent is tasked with analyzing sales data, it might need to:

Use file system APIs to access raw data

Employ data processing libraries for analysis

Leverage visualization tools to create reports

Utilize communication APIs to share results

The sophistication lies not in the individual tool interactions, but in the agent's ability to orchestrate these tools coherently toward a goal. This mirrors human cognitive processes - we don't just know how to use tools, we understand when each tool is appropriate and how to combine them effectively.
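A minimal sketch of the sales-data example, assuming a hypothetical tool registry with stubbed file, analysis, visualization, and messaging tools, and a fixed plan standing in for the model's tool-selection step. The point is the orchestration: each tool's output feeds the next, and the agent, not any individual tool, decides the order.

```python
import csv
import io
import statistics
from typing import Any, Callable

FAKE_FS = {"sales.csv": "region,sales\nEU,120\nUS,200\nAPAC,80\n"}  # stand-in data


def read_file(path: str) -> str:
    """File system tool (stubbed with an in-memory dict)."""
    return FAKE_FS[path]


def analyze(csv_text: str) -> dict:
    """Data processing tool: parse CSV and compute summary statistics."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    sales = {r["region"]: float(r["sales"]) for r in rows}
    return {"by_region": sales, "mean": statistics.mean(list(sales.values()))}


def chart(summary: dict) -> str:
    """Visualization tool: a text bar chart, one '#' per 40 units."""
    return "\n".join(f"{region:<5}{'#' * int(v // 40)}" for region, v in summary["by_region"].items())


def send(report: str) -> str:
    """Communication tool (stubbed): pretend to post the report."""
    return f"sent {len(report.splitlines())}-line report"


TOOLS: dict[str, Callable[[Any], Any]] = {
    "read_file": read_file, "analyze": analyze, "chart": chart, "send": send,
}


def run_plan(plan: list[str], initial: Any) -> list[tuple[str, Any]]:
    """Execute tools in the chosen order, piping each output into the next tool."""
    trace, value = [], initial
    for step in plan:
        value = TOOLS[step](value)
        trace.append((step, value))
    return trace


# A real agent would ask the model which tool to call next; here the plan is fixed.
trace = run_plan(["read_file", "analyze", "chart", "send"], "sales.csv")
```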

Memory and context management

Perhaps the most significant architectural challenge in agent systems is managing memory and context. Unlike stateless LLM interactions, agents need to maintain an understanding of their environment and previous actions over time. This has led to several innovative approaches:

Episodic Memory: Agents maintain a record of past interactions and their outcomes, allowing them to learn from experience and avoid repeating mistakes. This isn't just about storing conversation history; it's about extracting and organizing relevant information for future use.

Working Memory: Similar to human short-term memory, this allows agents to maintain context about their current task and recent actions. This is crucial for maintaining coherence in complex, multi-step operations.

Semantic Memory: Long-term storage of facts, patterns, and relationships that the agent has learned over time. This helps inform future decisions and strategies.
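One way to picture these three memory types in code, as a minimal sketch under simple assumptions: working memory is a bounded buffer of recent steps, episodic memory is a log of past tasks and outcomes that the agent can check before retrying something, and semantic memory is a key-value store of distilled facts. The class and method names are hypothetical; production systems typically back episodic and semantic memory with a database or vector store rather than in-process structures.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Episode:
    task: str
    action: str
    succeeded: bool


@dataclass
class AgentMemory:
    working: deque = field(default_factory=lambda: deque(maxlen=3))  # recent steps only
    episodic: list = field(default_factory=list)                     # past tasks and outcomes
    semantic: dict = field(default_factory=dict)                     # learned facts

    def note_step(self, step: str) -> None:
        self.working.append(step)  # the oldest step falls off once maxlen is reached

    def record(self, episode: Episode) -> None:
        self.episodic.append(episode)
        # Distill a reusable fact from successful experience.
        if episode.succeeded:
            self.semantic[episode.task] = episode.action

    def failed_actions(self, task: str) -> set[str]:
        """Actions that previously failed for this task, so the agent can avoid them."""
        return {e.action for e in self.episodic if e.task == task and not e.succeeded}


mem = AgentMemory()
for step in ["open file", "parse csv", "fix header", "compute totals"]:
    mem.note_step(step)
mem.record(Episode("load sales.csv", "parse with ';' delimiter", succeeded=False))
mem.record(Episode("load sales.csv", "parse with ',' delimiter", succeeded=True))
```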

Hierarchical planning and execution

One of the most sophisticated patterns emerging in agent architecture is hierarchical planning. This approach breaks down complex goals into manageable sub-tasks, creating what amount to cognitive hierarchies within the agent system. This mimics how human experts approach complex problems - breaking them down into smaller, manageable pieces.

The hierarchy typically consists of:

Strategic planning: High-level goal setting and strategy development

Tactical planning: Breaking strategies into specific, actionable tasks

Execution planning: Determining the specific steps needed for each task

Action execution: Carrying out the planned steps and monitoring results

This allows agents to manage complexity that would be overwhelming with a flat architecture. For instance, an agent tasked with "improve our website's performance" might:

At the strategic level: Decide to focus on load time and user experience

At the tactical level: Plan to optimize JavaScript / long tasks, compress images and improve server response time

At the execution level: Detail specific steps for each optimization

At the action level: Actually implement the changes and measure results
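The website-performance example maps naturally onto a tree, where each level refines the one above and only the leaves are concrete enough to act on. A minimal sketch, assuming the decomposition is hard-coded (a real agent would ask the model to produce each level); the `PlanNode` type and the execution-level steps are illustrative, while the strategic and tactical goals come from the example above.

```python
from dataclasses import dataclass, field


@dataclass
class PlanNode:
    goal: str
    level: str  # "strategic", "tactical" or "execution"
    children: list["PlanNode"] = field(default_factory=list)


def leaves(node: PlanNode) -> list[PlanNode]:
    """Depth-first walk: only leaf nodes are concrete enough to execute."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


plan = PlanNode("Improve our website's performance: focus on load time and user experience", "strategic", [
    PlanNode("Optimize JavaScript / long tasks", "tactical", [
        PlanNode("Code-split the main bundle", "execution"),
        PlanNode("Defer non-critical scripts", "execution"),
    ]),
    PlanNode("Compress images", "tactical", [
        PlanNode("Convert hero images to a modern format", "execution"),
    ]),
    PlanNode("Improve server response time", "tactical", [
        PlanNode("Cache rendered pages", "execution"),
    ]),
])


def act(step: PlanNode) -> str:
    # Action level (stubbed): a real agent would apply the change and measure the result.
    return f"done: {step.goal}"


results = [act(step) for step in leaves(plan)]
```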

Multi-Agent systems and collaboration

Perhaps the most intriguing pattern emerging in agent architectures is the development of multi-agent systems. These systems distribute cognitive load across multiple specialized agents, each handling different aspects of a complex task. This pattern has emerged as a natural solution to the limitations of single-agent systems, much as human organizations evolved to handle complex tasks through specialization and collaboration.

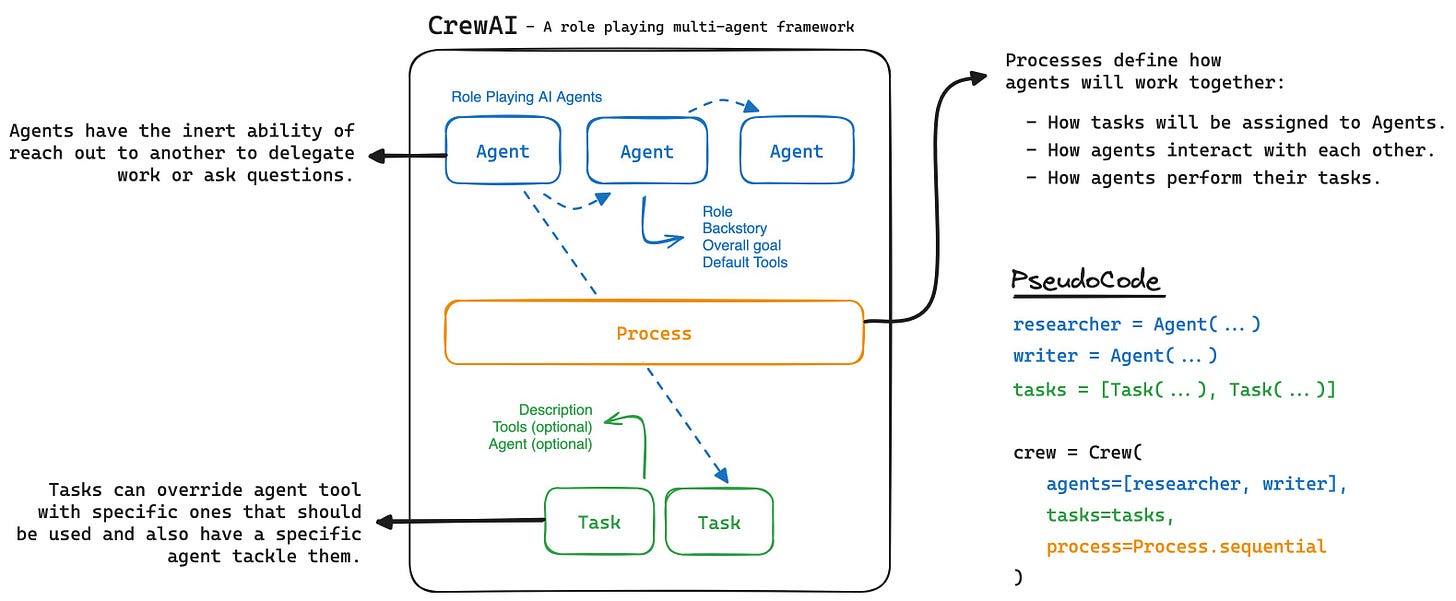

Microsoft's AutoGen and the open-source CrewAI framework exemplify this approach, allowing developers to create teams of agents that work together on complex tasks. A typical multi-agent system might include:

A coordinator agent that manages overall task flow and delegation

Specialist agents with deep knowledge in specific domains

Critic agents that review and validate work

Integration agents that handle communication between other agents and external systems

The power of this approach lies in its ability to break down complex cognitive tasks into manageable pieces while maintaining coherence through structured communication and collaboration protocols. For example, in a software development context, you might have:

A requirements agent that interfaces with stakeholders and maintains project goals

A design agent that creates technical specifications

Multiple development agents working on different components

Testing agents that verify functionality

Documentation agents that maintain technical documentation

Pictured is CrewAI, which allows you to create and manage teams of agents.

The interaction between these agents isn't simply a matter of passing messages; it involves sophisticated protocols for negotiation, consensus-building, and conflict resolution. This mirrors human organizational structures but with the advantage of perfect information sharing and consistent execution of established protocols.

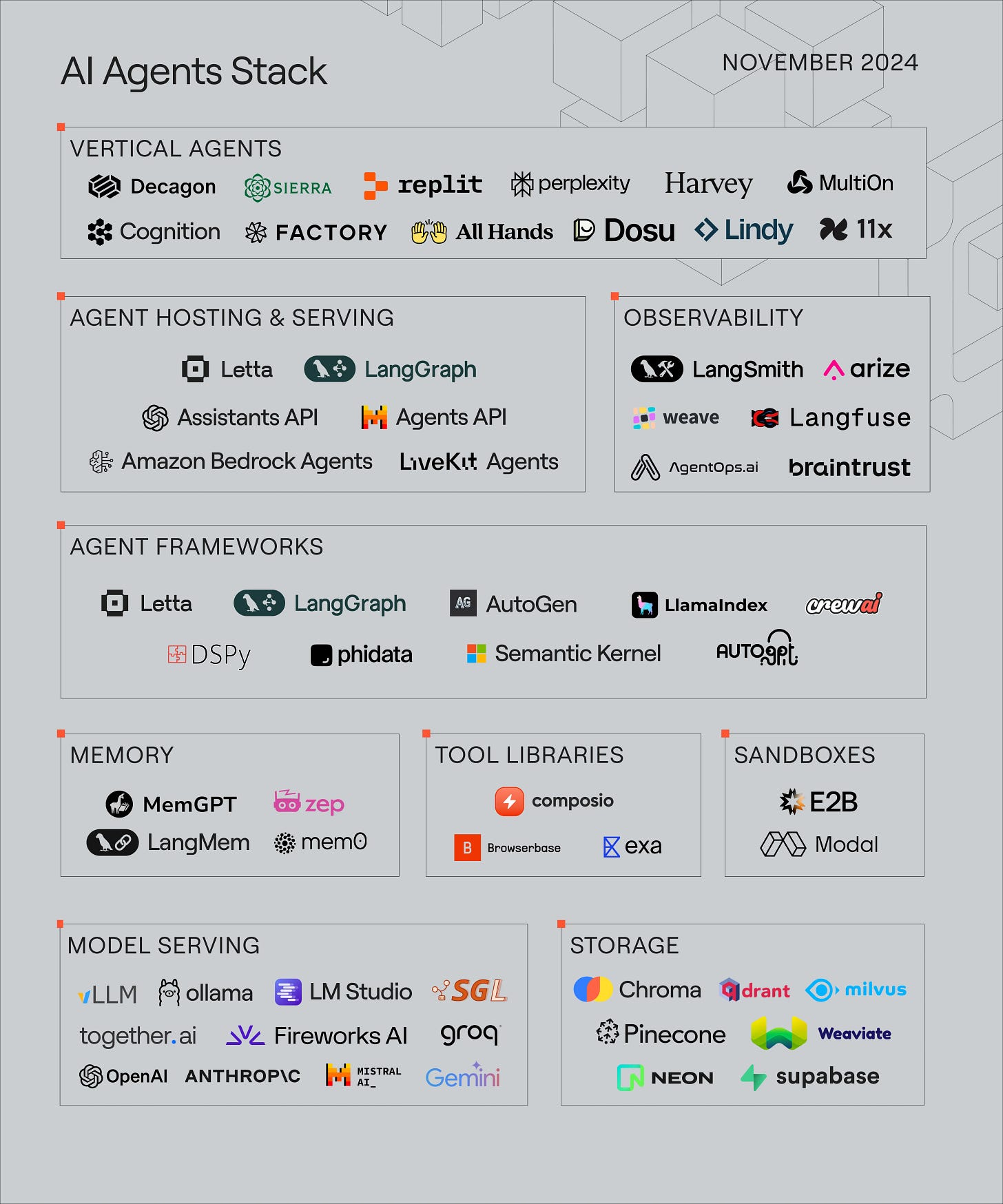

The AI agents stack organized into three key layers: agent hosting/serving, agent frameworks, and LLM models & storage.

The browser agent ecosystem: bridging AI and the web

The emergence of browser-based AI agents represents (imo) one of the most significant developments in the agent ecosystem. These agents can interact with web interfaces just as humans do - navigating pages, filling forms, extracting information, and executing complex workflows. This capability fundamentally changes the landscape of web automation and interaction, opening up possibilities that were previously impractical or impossible.

The current landscape

The browser agent ecosystem is currently divided into several distinct approaches, each with its own strengths and trade-offs:

Proprietary solutions

Google's Project Mariner represents one end of the spectrum - a tightly integrated solution built on top of Chrome and powered by Gemini. While still experimental, it showcases the potential for browser agents to become a native part of our web experience. I was fortunate to have had a chance to work with Mariner on bringing their vision to life.

OpenAI's Operator takes a different approach, focusing on general-purpose web interaction through a model called Computer-Using Agent (CUA). It's particularly notable for its advanced vision and reasoning capabilities, allowing it to understand and interact with complex web interfaces. Building on GPT-4o provides sophisticated decision-making capabilities, enabling it to handle complex tasks like booking travel arrangements or managing e-commerce transactions.

Video: Rowan Cheung

Open Source alternatives

At the other end of the spectrum, projects like Browser Use and Browserbase's Open Operator are democratizing browser automation capabilities.

Browser Use is an open-source project designed to enable AI agents to interact directly with web browsers, facilitating the automation of complex web-based tasks. By leveraging this tool, AI models can autonomously navigate websites, perform data extraction, and execute various web operations through a user-friendly interface. It identifies and interacts with web elements without manual configuration and is compatible with various large language models (LLMs).

Demo: "Read my CV & find ML jobs, save them to a file, and then start applying for them in new tabs, if you need help, ask me."

The team behind Browser Use now also offers a cloud-based version.

Browserbase’s Open Operator is an open-source tool designed to automate web tasks through natural language commands. By interpreting user instructions, it performs actions within a headless browser environment, streamlining complex web interactions. Open Operator leverages Browserbase’s cloud-based infrastructure.

Skyvern utilizes large language models (LLMs) and computer vision to automate browser-based workflows. It adapts to various web pages, executing complex tasks through simple, natural language commands. They’ve been exploring how more third-party integrations could be seamlessly incorporated into an agent workflow.

These open-source solutions offer several advantages:

Transparency: Users can inspect and modify the code, understanding exactly how the agent makes decisions

Customization: Organizations can adapt the tools to their specific needs

Cost Control: No dependency on proprietary API pricing

Community Development: Rapid iteration and improvement through community contributions

The Browserbase ecosystem, in particular, has gained traction for its Stagehand framework, which provides a robust foundation for building custom browser agents. It allows developers to create sophisticated automation workflows while maintaining control over the entire stack.

Beyond simple automation

What makes browser agents particularly interesting is their ability to go beyond traditional web automation. As Aaron Levie points out:

"AI Agents that can spin up infinite cloud browsers aren't for doing things that we already do just fine with APIs. They'll be used for the long tail of tasks that we never got around to wiring up with APIs before."

This insight gets to the heart of why browser agents matter. Consider some examples:

Complex research tasks:

Navigate multiple websites and cross-reference information

Extract relevant data while maintaining context

Synthesize findings into coherent reports

All without requiring specific APIs or integration points

User interface testing:

Agents can systematically explore web applications

Identify usability issues

Test edge cases

Document bugs and inconsistencies

All while understanding context and user experience principles

Content management:

Agents can monitor multiple sites for changes

Update content across platforms

Ensure consistency in branding and messaging

Handle the tedious aspects of digital presence management

“Deep Research” is one of my favorite implementations for complex research, and I’ve enjoyed using both OpenAI’s Deep Research and Google’s Deep Research (which is really good these days).

Technical implementation and challenges

The implementation of browser agents presents several unique technical challenges:

Visual understanding: Agents must interpret complex visual layouts and understand UI elements in context. This requires sophisticated computer vision capabilities combined with semantic understanding of web interfaces.

State management: Web applications can have complex state transitions and dynamic content. Agents need to track these states and understand how their actions affect the application.

Error handling: Web interfaces can be unpredictable, with elements that change position, disappear, or behave differently based on conditions. Agents need robust error handling and recovery mechanisms.

Security considerations: Browser agents with the ability to interact with web interfaces pose unique security challenges, particularly around credential management and sensitive data handling.
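For the error-handling point, a common tactic is to try several ways of locating an element and to retry with a short backoff before giving up. The sketch below assumes a hypothetical `FlakyPage` object standing in for a real browser driver (it is not Playwright's or Selenium's API), where the preferred selector fails because the element's id has changed.

```python
import time


class ElementNotFound(Exception):
    pass


class FlakyPage:
    """Hypothetical stand-in for a browser page whose layout has changed."""

    def __init__(self):
        self.available = {"text=Checkout"}  # the old '#checkout-btn' id no longer exists
        self.clicked = []

    def click(self, selector: str) -> None:
        if selector not in self.available:
            raise ElementNotFound(selector)
        self.clicked.append(selector)


def click_with_fallbacks(page, selectors, retries=2, delay=0.01):
    """Try each selector in order; retry the whole list with backoff before failing."""
    errors = []
    for attempt in range(retries + 1):
        for selector in selectors:
            try:
                page.click(selector)
                return selector
            except ElementNotFound as exc:
                errors.append(str(exc))
        if attempt < retries:
            time.sleep(delay * (2 ** attempt))  # exponential backoff between rounds
    raise ElementNotFound(f"all selectors failed: {errors}")


page = FlakyPage()
used = click_with_fallbacks(page, ["#checkout-btn", "text=Checkout"])
```

Returning the selector that worked lets the agent record it, for example in episodic memory, so the next run can try the working strategy first.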

Economic and strategic implications

The rise of browser agents has significant implications for how we think about web applications and services. As Levie notes:

"The friction in doing so is so high that by the time you get around to it you probably just end up doing the task manually anyway. Now, you just spend a couple bucks to see if the workflow works and then scale it."

This economic reality could reshape how we think about:

API development:

Companies might prioritize human-friendly interfaces over API development

The "API-first" paradigm might shift to "browser-first" for certain use cases

New patterns of integration could emerge based on agent interaction

Service design:

Web interfaces might evolve to be more agent-friendly while maintaining human usability

New standards could emerge for agent-interface interaction

Services might offer specialized interfaces for agent interaction

Cost structures:

The economics of automation could shift dramatically

New pricing models might emerge based on agent usage

The cost-benefit analysis of automation could change for many tasks

Real-world applications and impact

The transition of AI agents from theoretical constructs to practical tools is already underway, with applications emerging across multiple domains. While it's easy to get caught up in the potential of the technology, it's crucial to examine current implementations critically, understanding both their successes and limitations.

Software Development and Engineering

The impact of AI agents in software development provides perhaps the clearest picture of their current capabilities and limitations. We may see a shift from simple code completion to "AI teammates" that can participate throughout the development lifecycle.

Current implementation examples:

Code Generation and Review:

Agents can now generate entire components or services based on natural language descriptions

They can review pull requests, identifying potential issues and suggesting improvements

More sophisticated agents can maintain context across multiple files and understand project-specific conventions

Testing and quality assurance:

Automated test generation based on code changes

UI testing through browser interaction

Performance profiling and optimization suggestions

Documentation and maintenance:

Automatic documentation updates based on code changes

API documentation generation and maintenance

Code refactoring suggestions based on usage patterns

What's particularly notable is how these implementations have evolved beyond simple automation. For instance, an agent might notice a pattern in how developers handle certain edge cases and proactively suggest standardizing this approach across the codebase. This level of contextual awareness and proactive assistance represents a significant advance over traditional development tools.

Enterprise Automation and Business Processes

In the enterprise context, AI agents are beginning to tackle the "long tail" of automation tasks that traditional approaches couldn't economically address.

Current Applications:

Document Management:

Automated file organization across multiple systems

Content categorization and tagging

Metadata management and updates

Cross-platform content synchronization

Customer Service:

Multi-step query resolution

Proactive issue identification

Cross-system information gathering

Contextual response generation

Workflow Automation:

Complex approval processes

Multi-system data reconciliation

Compliance monitoring and reporting

Resource allocation and scheduling

The key difference from traditional automation is the ability to handle exceptions and edge cases. For instance, when organizing files, an agent can recognize when the same entity is referred to by different names across systems and make intelligent decisions about categorization.
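Recognizing that two systems refer to the same entity under different names can be approximated with normalization plus fuzzy string matching. A minimal sketch using Python's standard-library `difflib`; the 0.85 threshold, the suffix list, and the greedy grouping strategy are illustrative assumptions, and a production agent would typically combine this with the model's own judgment or other identifiers.

```python
import difflib
import re

SUFFIXES = {"inc", "llc", "ltd", "corp", "corporation", "co"}  # assumed list


def normalize(name: str) -> str:
    """Lowercase, strip punctuation, and drop common company suffixes."""
    words = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(w for w in words if w not in SUFFIXES)


def same_entity(a: str, b: str, threshold: float = 0.85) -> bool:
    return difflib.SequenceMatcher(None, normalize(a), normalize(b)).ratio() >= threshold


def group_names(names: list[str]) -> list[list[str]]:
    """Greedy clustering: add each name to the first group whose first member matches."""
    groups: list[list[str]] = []
    for name in names:
        for group in groups:
            if same_entity(group[0], name):
                group.append(name)
                break
        else:
            groups.append([name])
    return groups


groups = group_names(["Acme Corp.", "ACME Corporation", "acme inc", "Globex LLC", "Globex"])
```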

Challenges and Limitations: The Reality Check

While the potential of AI agents is significant, it's crucial to understand their current limitations and challenges. This isn't just about technical constraints – it's about fundamental issues that need to be addressed for the technology to realize its full potential.

Technical challenges

Reliability and consistency

The most immediate challenge facing AI agents is reliability.

Unlike traditional software that follows deterministic rules, agents can exhibit unpredictable behavior, particularly when facing novel situations. This manifests in several ways:

Hallucinations and False Confidence:

Agents can make incorrect assumptions about their environment

They might execute actions based on misunderstood context

There's often no built-in mechanism to recognize when they're operating outside their competence

Error Propagation:

In multi-step tasks, small errors can compound

Recovery from intermediate failures remains a significant challenge

Long-running tasks are particularly vulnerable to cascading failures

Context Management:

Maintaining accurate state across long sequences of operations

Handling interruptions and resuming tasks

Managing multiple concurrent operations reliably

As Levie points out:

"How do we ensure accuracy on results and not have incremental hallucination or mistakes at each step?"

This remains one of the core challenges in agent development.
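A back-of-the-envelope calculation shows why errors compound. Assuming, for illustration, that each step succeeds independently with the same probability p, an n-step task succeeds with probability p^n. The second function adds a further assumption: a perfect verifier that catches every failed step so it can be retried. The inputs below are hypothetical, not measurements of any real agent.

```python
def chain_success(p: float, n: int) -> float:
    """Probability that all n independent steps succeed, each with probability p."""
    return p ** n


def step_success_with_retries(p: float, retries: int) -> float:
    """Per-step success if a (perfect, hypothetical) verifier catches failures
    and the step is retried up to `retries` extra times."""
    return 1 - (1 - p) ** (retries + 1)


p, n = 0.95, 20
naive = chain_success(p, n)                                   # about 0.36
verified = chain_success(step_success_with_retries(p, 1), n)  # about 0.95
```

Even a 95% per-step success rate leaves a 20-step task succeeding only about a third of the time, which is why step-level verification matters so much for long-running agents.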

Security and privacy concerns

The autonomous nature of agents raises significant security and privacy concerns:

Access control:

Agents often need broad system access to be effective

Traditional security models aren't designed for autonomous actors

Managing credential delegation safely is complex

Data privacy:

Agents may handle sensitive information across multiple systems

Privacy boundaries can be unclear in complex workflows

Data retention and disposal policies need rethinking

Audit and accountability:

Tracking agent actions for compliance

Establishing responsibility for agent decisions

Managing liability in automated processes
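For the access-control and audit points, one common pattern is to give each agent an explicit allowlist of tools and to log every attempted action, whether allowed or not. A minimal sketch, assuming hypothetical tool names and an in-memory log; a real deployment would enforce the policy outside the agent's own process and write to tamper-evident storage.

```python
import json
import time
from typing import Any, Callable


class PolicyViolation(Exception):
    pass


class GuardedToolbox:
    """Wraps tools with a per-agent allowlist and an append-only audit trail."""

    def __init__(self, agent_id: str, tools: dict[str, Callable[..., Any]], allowed: set[str]):
        self.agent_id = agent_id
        self.tools = tools
        self.allowed = allowed
        self.audit_log: list[str] = []

    def call(self, name: str, **kwargs: Any) -> Any:
        permitted = name in self.allowed
        # Log before acting, so denied attempts are recorded too.
        self.audit_log.append(json.dumps({
            "ts": time.time(), "agent": self.agent_id,
            "tool": name, "args": kwargs, "allowed": permitted,
        }))
        if not permitted:
            raise PolicyViolation(f"{self.agent_id} may not call {name}")
        return self.tools[name](**kwargs)


tools = {
    "read_invoice": lambda invoice_id: {"id": invoice_id, "total": 42},
    "issue_refund": lambda invoice_id, amount: f"refunded {amount} on {invoice_id}",
}
box = GuardedToolbox("support-agent", tools, allowed={"read_invoice"})
invoice = box.call("read_invoice", invoice_id="INV-1")
try:
    box.call("issue_refund", invoice_id="INV-1", amount=42)
    blocked = ""
except PolicyViolation as exc:
    blocked = str(exc)
```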

Economic and practical limitations

The economics of AI agents present their own challenges:

Computational costs:

Running sophisticated agents requires significant computing resources

Cost per operation can be high compared to traditional automation

Scaling economics aren't yet clearly understood

Integration overhead:

Adapting existing systems for agent interaction

Training and maintenance costs

Development of agent-friendly interfaces

Skill requirements:

Effective agent deployment still requires significant expertise

Understanding agent limitations and capabilities

Managing and monitoring agent operations

Conclusion: The path forward

AI agents represent a fundamental shift in how we interact with technology. While current implementations have limitations, we're clearly moving toward a future where autonomous AI agents will be integral to how we work, create, and solve problems.

The key to successful adoption lies in understanding both the potential and limitations of these systems. As Levie notes:

"The next decade will be defined by how well we collaborate with AI - not just how smart it is."

This suggests that success depends not just on technical advancement, but on developing frameworks for deployment and integration that unlock entirely new categories of value in areas of "non-consumption" where work simply wasn't being done before.

For developers, business leaders, and technologists, the imperative is to engage with this technology thoughtfully. Understand its capabilities and boundaries, but also expand your imagination beyond automating existing workflows to envision what was previously impossible. When the value of technology is no longer constrained by human bandwidth, the opportunities for innovation explode.

The age of AI agents is unfolding now.

The question isn't whether they will transform how we work with technology, but how we shape that transformation. As we navigate this transition, we need both awareness of the challenges and the courage to reimagine what's possible when software becomes an autonomous partner rather than just a tool.
